Business Use Case

Infineon, the largest German semiconductor manufacturer, wanted to update its Manufacturing Execution Systems (MES) by changing the database and replacing the corresponding application with a version rewritten by Systema, a software house that specializes in MES.

The customer’s senior management decided that the aging Adabas and Natural system had to be replaced, and the goal was to accomplish this within three years for three production sites.

In a preliminary Proof of Concept (PoC), the Infineon team selected MS SQL Server as the new database system.

In total, three production systems had to be migrated, together with a couple of different subsystems. The related systems for development and testing also needed to be considered in the customer’s plans.

The customer’s primary question during the planning stage was, “Do we try doing the switch all at once, in a big bang, or should we go with a phased approach?” The big bang transition seemed too risky for the customer’s production goals, so the decision was made to plan a phased, incremental migration with defined functional areas. The incremental approach had several advantages: a fall-back plan could be put in place to address any problems, smaller package sizes (safe harbors) could be defined, and results could be easily monitored. Additionally, the production system would have no downtime while data replication was occurring.

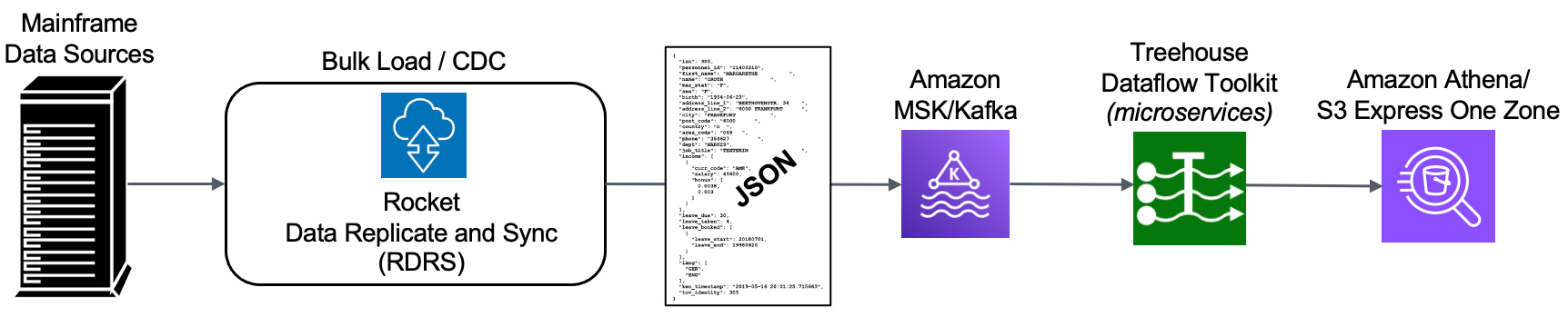

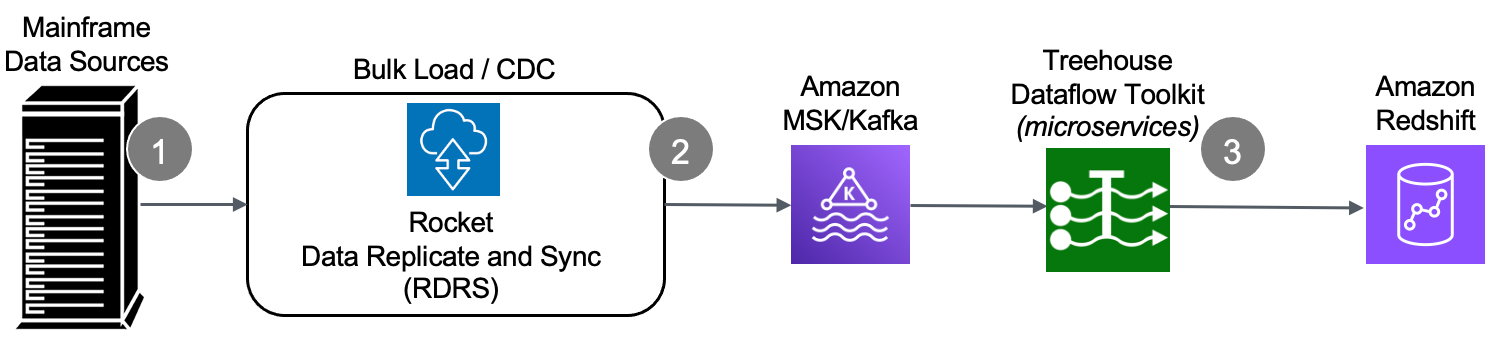

After setting up a workflow with all the necessary actions, the team found that a sequential approach was very time-consuming, so the next question was, “How can we parallelize tasks?” The answer was to find a product that supports real-time replication of data from the old database to the new one. With such a product, production is not interrupted: migrated parts of the application already run on the new database, while non-migrated parts continue to run on the old one.

The software must also guarantee co-existence between the currently used Adabas database and the new MS SQL Server database. In addition, it must support the change from the non-SQL Adabas database structures to an MS SQL Server database with normalized structures.
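To make the normalization requirement concrete, here is a minimal Python sketch of splitting one record that carries a repeating field into a parent row and child rows. It does not show how RDRS performs this transformation; the function `normalize_record`, the sample record, and the field names `lot_id`, `product`, and `wafer_ids` are all hypothetical and chosen only for illustration.

```python
from typing import Any


def normalize_record(
    record: dict[str, Any], key: str, repeating: list[str]
) -> tuple[dict[str, Any], dict[str, list[dict[str, Any]]]]:
    """Split one nested record into a parent row plus child rows.

    Each repeating field becomes its own list of child rows that carry the
    parent key and a 1-based sequence number (hypothetical target layout).
    """
    # Parent row: every field that is not a repeating field.
    parent = {k: v for k, v in record.items() if k not in repeating}

    # Child rows: one row per occurrence of each repeating field.
    children: dict[str, list[dict[str, Any]]] = {}
    for field_name in repeating:
        children[field_name] = [
            {key: record[key], "seq": i, "value": value}
            for i, value in enumerate(record.get(field_name, []), start=1)
        ]
    return parent, children


# Hypothetical source record with one repeating field.
lot = {"lot_id": 4711, "product": "sensor-a", "wafer_ids": ["W1", "W2", "W3"]}
parent_row, child_rows = normalize_record(lot, "lot_id", ["wafer_ids"])
print(parent_row)
print(child_rows["wafer_ids"])
```

In a relational target, the parent row would map to one table and each list of child rows to a separate table keyed by the parent key and the sequence number.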

Bi-directional replication is another requirement, because during a certain phase in the migration, updates must be replicated in both directions.
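One general concern with bi-directional replication is that a change applied on one side must not be replicated back to the side it came from. The following Python sketch illustrates a common way to handle this, tagging each change with its origin. It is a simplified in-memory model under stated assumptions, not a description of how RDRS works; the `Store` class and the `replicate` function are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Store:
    """Toy database with a change log (hypothetical, in-memory only)."""

    name: str
    rows: dict = field(default_factory=dict)
    log: list = field(default_factory=list)  # entries: (key, value, origin)

    def write(self, key, value, origin=None):
        # Local writes are tagged with this store's own name as origin;
        # replicated writes keep the origin of the store that made them.
        self.rows[key] = value
        self.log.append((key, value, origin or self.name))


def replicate(source: Store, target: Store) -> int:
    """Apply source's logged changes to target and return how many applied.

    Changes that originated on the target are skipped, so they are not
    echoed back in an endless loop.
    """
    applied = 0
    for key, value, origin in source.log:
        if origin == target.name:
            continue
        target.write(key, value, origin=origin)
        applied += 1
    source.log.clear()
    return applied


old_db = Store("old")
new_db = Store("new")
old_db.write("lot-1", "started")    # update made by a non-migrated part
new_db.write("lot-2", "finished")   # update made by a migrated part

first = replicate(old_db, new_db)   # old -> new
second = replicate(new_db, old_db)  # new -> old, skips the echoed change
third = replicate(old_db, new_db)   # nothing left to apply
print(first, second, third)
```

After the three passes both stores hold the same rows. A real product would also need to handle conflicting updates to the same key, which this sketch ignores.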

The Technology Solution...

Infineon contacted Treehouse Software in 2020 to discuss Rocket Data Replicate and Sync (RDRS), the product formerly called tcVISION, and set up a presentation and discussion. When the session showed promise for RDRS, a PoC was scheduled to demonstrate whether RDRS could handle the use case and requirements described above. The first part of the PoC ran with Oracle, which was Infineon’s initial choice of target RDBMS, and afterwards the teams tested with MS SQL Server, which was Infineon’s final choice. The PoC covered a three-phase migration model, transformation capabilities, performance testing, bi-directional replication, and various other requirements. The PoC produced successful results.

Additionally, with RDRS, no replication efforts were needed in the application and a safe switchover was achieved.

Interestingly, Infineon went with the most complicated scenario first, so they could identify any difficulties early on in the project. Infineon and Treehouse technical teams fulfilled the use cases and requirements requested by Infineon senior management in order to move into production.

About Treehouse Software

Since 1982, Treehouse Software has been serving enterprises worldwide with industry-leading software products and outstanding technical support. Today, Treehouse Software is a global leader in providing data replication and integration solutions for the most complex and demanding heterogeneous environments, as well as feature-rich, accelerated-ROI offerings for information delivery and application modernization. Please contact Treehouse Software at sales@treehouse.com with any questions. We look forward to serving you in your modernization journey!

How does it work?
